💔 Is AI Making Us Meaner?

Research suggests chatting with LLMs may have unintended spillover effects

Whenever a new technology emerges, people naturally worry about job displacement, new challenges to human flourishing, and threats to traditional ways of life.

The internet gave us instant access to practically any information from around the world, but it also gave crackpots and criminals an effective way to coordinate new harms to society. Similarly, social media has become a powerful entertainment tool, which is fine until it eats into the hours you should be spending on other worthwhile activities, like going for walks or sleeping.

With the growing popularity of generative AI, these concerns are once again at the forefront of many conversations. Although large language models (LLMs) are practical assistants in daily life, they carry their own risks for well-being and social health. People are understandably worried about how automated content generation affects human creativity and the lives of creators, writers, and artists.

Those are legitimate concerns to consider and discuss, but there’s another question most of us have been ignoring: could the way we talk to AI reshape how we communicate with the humans around us?

🤖 How AI chats may be setting a new tone

Language models like ChatGPT are becoming ubiquitous, with practically every major tech company now offering its own version. One of their major advantages is that they’re highly efficient and intuitive sources of information. When I have a question, I now ask an LLM one or two questions to get the answer instead of using a search engine to locate and read several pages of relevant information.

As more people adopt LLMs in daily life, it’s possible we’ll spend just as much time talking to AI as we do talking to other humans (maybe more on some days!). Since we’re likely to behave differently with AI than with humans, this could start to shift our communication norms and affect our interactions with people.

In a study published in September 2024, researchers tested this exact idea. They recruited 500 online participants and asked each of them to come up with a funny caption for a photo. To write this caption, each participant was told to collaborate with either an AI partner (ChatGPT) or a supposed human partner (in reality, also ChatGPT).

After collaborating over chat to create their captions, each participant was asked to rate the quality of a funny caption supposedly written by another human participant, on a scale from 1 (very poor) to 10 (exceptional). In reality, this test caption was fixed and identical for all participants.

So every participant did the same thing in the experiment; the only difference was that some believed they were collaborating with AI while others believed they were collaborating with a human. How would this difference in belief affect the tone of people’s conversations, and would it change their judgments of another person’s work?

First, using natural language processing (computational analysis of text), the researchers tested how conversations differed when people believed they were talking to AI versus a human. Interestingly, there was no difference in politeness. People weren’t particularly rude to ChatGPT; in fact, they were more likely to use the word “please” when they knew they were talking to AI than when they believed they were chatting with a human.

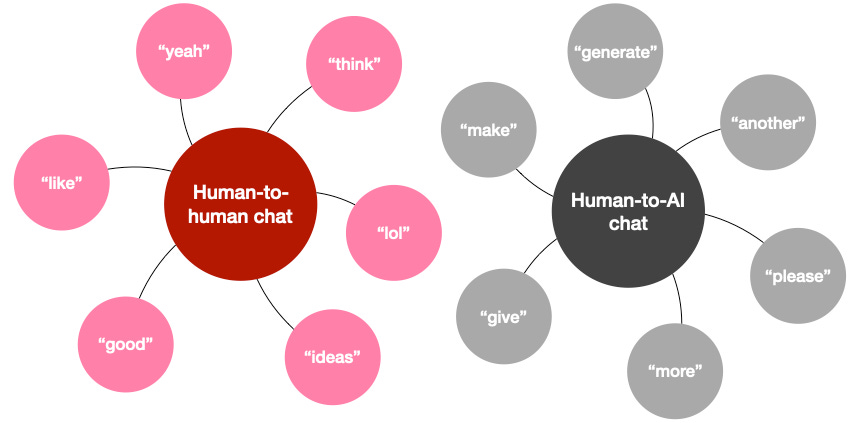

As you might expect, though, people were more demanding, goal-oriented, and task-focused when talking to AI. They spent less time on informal, relaxed chat like “hello”, “yeah”, and “lol”, and were quicker to get down to business on the assigned task, mainly using words like “generate” and “make”. Importantly, the conversations with AI also had a less positive emotional tone. You can see the kinds of words that dominated people’s conversations in the visual above.

Now for the more central research question: did participants behave differently when rating other people’s content just because they had been chatting with AI beforehand?

Participants rated another person’s caption as significantly worse after talking with AI than after talking with a supposed human. On the 1-10 scale, ratings were approximately 1 point more generous after participants collaborated with what they believed to be a fellow human rather than AI.

In other words, the way we perceive and interact with AI agents may indeed affect our judgments about other people. When we get used to a more demanding and less joyful tone in speaking to ChatGPT, we may become harsher critics of the people and events around us too.

Since the researchers didn’t test how judgments change over time after talking with AI, it’s possible this spillover effect fades as we reconnect with other people throughout the day. But you never know how important that very next conversation will be. It only takes a moment of poorly calibrated conversation or judgment to mess things up, and if you’ve been chatting with ChatGPT right before a job interview or a date, you might not get a second chance. And of course, as we spend more time talking to AI over the years, the challenge is likely to grow.

Generative AI comes with many productivity benefits, but it’s always worth keeping an eye on the possible risks and mitigating them when they’re likely to affect you. At least for now, ChatGPT won’t be a good replacement for broken social connections.

⭐️ Takeaway tips

#1: Reflect on your tone after using LLMs

When we talk to AI, our language has a different emotional tone and quality than when we talk to other humans. As we adapt to that mode of interaction, other aspects of our behavior and judgment may follow suit. Since we’re highly demanding and goal-oriented with AI, we may develop unintentionally harsh expectations and judgments that linger in other areas of our lives. Be aware of these shifts in mindset and adjust accordingly.

#2: Consider a cool-down period

If you work a lot with generative AI, consider adding a buffer period between those sessions and any important interactions with other people. The conversational style and emotional reactions you adopt while using AI may unintentionally carry over into other areas of your life, and a short delay before more human-focused tasks may help you reset.

#3: There’s nothing dumb about being human with AI

The research focused on spillover effects from talking with AI to talking with humans. However, people often joke about the reverse pattern in their own behavior, like asking ChatGPT how it’s doing or wishing it a good day after a brief conversation. We can all see the humor in this, but it may not be so silly after all. Keeping your human charm as you interact with a chatbot may be one good way to buffer against some of the downsides of adapting to machine talk.

“Each friend represents a world in us, a world possibly not born until they arrive, and it is only by this meeting that a new world is born.”

~ Anaïs Nin